Over a year ago I found out about a neat procedural texturing algorithm inspired by principles of wave function collapse from quantum physics. It really caught my attention as a novel way to do procedural texturing using a human-designed algorithm (which is quickly going out of fashion). The full details of what science has learned about how this actually works are too much for this post, so I’ll try to summarize. Quantum objects like electrons exist in a state called a superposition where they are thought to be in all possible states (positions around the nucleus, in this case) at the same time until observed. This state of being in multiple places at once is called superposition. When the electron’s state is observed (i.e. something about it is measured) its superposition collapses into a definite state based on some inherent probability distribution. The probability distribution is called the wave function. The work that I based mine off of is available on GitHub, and it goes into a lot more detail about the actual algorithm. Definitely check it out for more details and examples.

https://github.com/mxgmn/WaveFunctionCollapse

The wave function collapse procedural texturing algorithm uses this concept to synthesize a texture using either an input set of tiles, or an input texture from which it builds a locally-similar texture of theoretically any size. The output texture is made of tiles, which are the quantum-like objects in this algorithm. Each output tile is in a superposition of all possible input tiles at once, and with each iteration of the algorithm a tile is observed, collapsing it into a single definite tile, and the consequences of that (neighbor constraints, etc) propagate out until the superposition is again in a valid state. When all tiles have been observed the algorithm is complete.

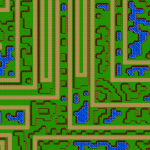

Here is an example of a system using specific tiles like you’d see in an old 2d game. The input tiles are the first image, and are stitched together via the cycle of observing the wave function and then propagating the neighbor constraints out from the observed tile.

-

Summer tileset

©

-

Summer generated texture 2

©

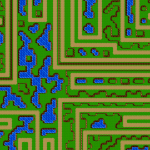

-

Summer generated texture 1

©

The other method takes a texture as its input, chops that texture up into all possible unique tiles of a given size, and then overlaps them with each other to generate the final image. The constraint in this method is that tiles that partially overlap each other must have matching colors on the parts that overlap. This creates output images that are random but locally have the same details as the input texture.

These results are pretty damn cool in my opinion. It’s intuitively visible that the output pictures are similar to the inputs but each is unique. Like the tiled examples above they don’t always make great sense from a game design perspective, but there may be some tweaks or other constraints that could be applied to this algorithm to generate something a little more usable.

Misc thoughts / observations:

- My c++ implementation is much faster than the original C# code. As is now though I don’t think it’s fast enough for real time streaming, especially the overlapping method. That would require either extremely large tiles or a new unit and constraint system that isn’t part of this implementation.

- The algorithm can fail and does so at a pretty high rate. It’s entirely possible there are still bugs in my implementation but many seeds fail to result in a proper observation. This is another part that makes it iffy to use as-is for game content, as I’d really want my procedural content algorithms to never fail or have a very low failure rate.

- The algorithm increases significantly in both memory and runtime cost as the number of discrete states (i.e. unique tiles) goes up. You’ll notice most of the example bitmaps on the github page only have a few colors in them. Running this algorithm on something with thousands of colors would not work without further optimization.

- Guaranteeing gameplay usability would be another challenge, but luckily this algorithm is pretty flexible with constraints. Theoretically any constraint system could be used.

I’m satisfied with where I’m at with this specific texture synthesis project, but I’ll definitely have this algorithm in my mind with other game projects I may work on. I am kind of curious if a good tile-based game level could be generated with this.

![]()